Deep learning thrives on large datasets and powerful computation, but training a model isn’t just about feeding data into a network. One of the most critical decisions in training is choosing the right batch size. While it might seem like a technicality, batch size directly affects learning speed, accuracy, and computational efficiency.

It can dictate whether a model converges smoothly or struggles with erratic updates. Understanding batch size isn't just for researchers—anyone working with deep learning needs to grasp how this parameter influences the training process.

What is Batch Size in Deep Learning?

Batch size in deep learning is the number of training samples processed before the model parameters are updated. Rather than feeding the entire dataset at once, training proceeds in small batches. This keeps memory and computation manageable, since deep learning models often need large amounts of data to learn well.

Training can occur in three modes based on batch size:

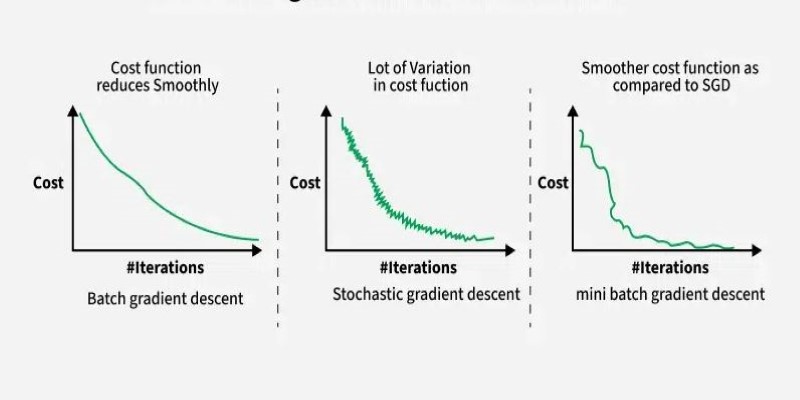

- Batch Gradient Descent – The whole dataset is used to compute each gradient and update the model parameters. This is computationally expensive and rarely practical for large datasets, although it can work when the data fits comfortably in memory.

- Mini-Batch Gradient Descent – A subset of the dataset is used per iteration. This is the most common method in practice, offering a compromise between efficiency and stability.

- Stochastic Gradient Descent (SGD) – One sample is processed at a time, so the parameters are updated after every sample. It uses little memory, but the updates are noisy and convergence can be slow.

Most deep learning models are trained with mini-batches, which combine the advantages of both extremes: more stable gradient estimates than single-sample updates, and lower memory and compute cost per update than processing the full dataset.
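The three modes differ only in how many samples feed each update, which a single loop can show. The following is a minimal sketch, assuming a one-parameter model y = w * x fit with squared error on toy data; the function name `train`, the data, and the hyperparameters are illustrative choices, not taken from any library.

```python
import random

def train(xs, ys, batch_size, lr=0.01, epochs=50, seed=0):
    """Fit y = w * x with gradient descent; batch_size selects the mode."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    updates = 0
    for _ in range(epochs):
        rng.shuffle(indices)  # new sample order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch w.r.t. w.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
            updates += 1
    return w, updates

# Toy data generated from y = 3x (hypothetical).
xs = [0.1 * i for i in range(1, 33)]  # 32 samples
ys = [3.0 * x for x in xs]

w_full, n_full = train(xs, ys, batch_size=len(xs))  # batch gradient descent
w_mini, n_mini = train(xs, ys, batch_size=8)        # mini-batch gradient descent
w_sgd, n_sgd = train(xs, ys, batch_size=1)          # stochastic gradient descent
```

With 32 samples and 50 epochs, the full-batch run makes 50 updates, the mini-batch run 200, and the single-sample run 1,600. On this noiseless toy problem, more updates simply bring `w` closer to 3; on real data the extra updates also carry more noise.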

Why Batch Size Matters in Model Training

Batch size affects nearly everything in deep learning, ranging from computation efficiency to model accuracy. Selecting an appropriate batch size is a balancing act between performance, hardware considerations, and training time.

Computational Efficiency and Memory Usage

Small batch sizes consume less memory, so they are well suited to GPUs with limited memory. They can make training slower, however, because each epoch needs more updates and each update makes less use of the hardware's parallelism. Large batch sizes exploit parallelism well but need more memory, since the activations for every sample in the batch are held at once. If the batch is too large for the available memory, training fails with an out-of-memory error.
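A rough back-of-the-envelope estimate can show where the memory limit falls. The sketch below assumes memory grows linearly with batch size (a fixed cost plus a per-sample cost); the function name and all byte figures are hypothetical, and real frameworks add allocator overhead that this ignores.

```python
def largest_power_of_two_batch(memory_budget_bytes, fixed_bytes, per_sample_bytes):
    """Largest power-of-two batch size whose estimated footprint fits the budget.

    Assumes memory = fixed_bytes + batch_size * per_sample_bytes, which is a
    simplification; measure on real hardware before relying on the result.
    """
    available = memory_budget_bytes - fixed_bytes
    if available < per_sample_bytes:
        return 0  # not even a single sample fits
    max_batch = available // per_sample_bytes
    return 1 << (max_batch.bit_length() - 1)  # round down to a power of two

GIB = 1024 ** 3
MIB = 1024 ** 2

# Hypothetical numbers: 8 GiB device, 2 GiB for weights and optimizer state,
# 40 MiB of activations per sample.
batch = largest_power_of_two_batch(8 * GIB, 2 * GIB, 40 * MIB)
print(batch)  # 128
```

Under the same assumptions, if each sample needed only 20 MiB (for example, because activations were stored in 16-bit rather than 32-bit values), the estimate would rise to 256.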

Model Generalization and Accuracy

Batch size influences how well a model generalizes to unseen data. Smaller batches introduce randomness into parameter updates, which can act as a mild regularizer and improve generalization. However, if the batch size is too small, training can become unstable, leading to poor convergence. Larger batches provide smoother updates but may lead models to solutions that generalize less well, because gradients averaged over many samples carry less of the noise that helps explore the loss surface.
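The link between batch size and update noise can be illustrated with a small simulation. This sketch treats per-sample gradients as random draws from a normal distribution with mean 1.0 and standard deviation 1.0, which is a toy assumption rather than a real model; `gradient_estimate_spread` is a hypothetical helper name.

```python
import random
import statistics

def gradient_estimate_spread(batch_size, trials=2000, seed=0):
    """Standard deviation of the mini-batch mean of simulated per-sample gradients."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        batch = [rng.gauss(1.0, 1.0) for _ in range(batch_size)]
        estimates.append(sum(batch) / batch_size)
    return statistics.stdev(estimates)

# Spread of the gradient estimate for a few batch sizes.
spreads = {b: gradient_estimate_spread(b) for b in (1, 4, 16, 64)}
for b, s in spreads.items():
    print(f"batch_size={b:3d}  spread={s:.3f}")
```

In this toy setting the spread shrinks roughly with the square root of the batch size, so going from 1 to 64 samples cuts the noise by about a factor of eight. Real gradients are not independent normal draws, so the exact ratio will differ.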

Training Speed and Convergence

Training efficiency depends on batch size selection. Large batches mean fewer iterations per epoch, which usually shortens each epoch on parallel hardware, although the model may need more epochs to reach the same accuracy. Smaller batches provide more frequent updates, which can help models escape local minima and find better solutions. The challenge is striking a balance: batch sizes that are too large may lead to poor convergence, while those that are too small may slow training excessively.
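The relationship between batch size and iterations per epoch is simple arithmetic. The sketch below assumes a hypothetical dataset of 50,000 samples and counts a final partial batch as one update.

```python
import math

def updates_per_epoch(num_samples, batch_size):
    """Parameter updates in one pass over the data (the last batch may be partial)."""
    return math.ceil(num_samples / batch_size)

num_samples = 50_000  # hypothetical dataset size
for batch_size in (16, 64, 256, 1024):
    print(batch_size, updates_per_epoch(num_samples, batch_size))
# 16 3125
# 64 782
# 256 196
# 1024 49
```

Fewer updates per epoch does not by itself mean less total work: every sample is still processed once per epoch, so any speedup comes from how well the hardware handles larger batches in parallel.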

Learning Rate and Optimization Stability

Batch size and learning rate go hand in hand. Larger batch sizes usually call for higher learning rates to compensate for the reduced number of updates. If the learning rate is too low for a large batch, training can stagnate. Conversely, smaller batches allow more granular adjustments but may need lower learning rates to keep noisy updates from destabilizing training. Adaptive optimizers like Adam and RMSprop can help mitigate this effect, but batch size still plays a crucial role in determining the ideal learning rate.
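One way to put this coupling into practice is to rescale a known-good learning rate when the batch size changes. The sketch below shows two commonly used heuristics, linear scaling and square-root scaling; neither is prescribed by this article, and the function name and reference values are hypothetical. Treat the result as a starting point for tuning.

```python
def scale_learning_rate(base_lr, base_batch_size, new_batch_size, rule="linear"):
    """Rescale a learning rate tuned at base_batch_size for new_batch_size.

    "linear" multiplies by the batch-size ratio; "sqrt" multiplies by its
    square root. Both are heuristics and may need warmup or further tuning.
    """
    ratio = new_batch_size / base_batch_size
    if rule == "linear":
        return base_lr * ratio
    if rule == "sqrt":
        return base_lr * ratio ** 0.5
    raise ValueError(f"unknown rule: {rule!r}")

# Hypothetical reference point: lr = 0.1 worked well at batch size 256.
lr_linear = scale_learning_rate(0.1, 256, 1024)            # 0.4
lr_sqrt = scale_learning_rate(0.1, 256, 1024, rule="sqrt")  # 0.2
lr_small = scale_learning_rate(0.1, 256, 64)                # 0.025
```

Which rule works better depends on the optimizer and the model, so it is worth comparing both against a short training run.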

Common Mistakes When Selecting Batch Size

Selecting the right batch size is crucial for deep learning performance, yet several common mistakes can hinder training. A common misconception is that larger batch sizes always speed up training. While they process more data at once, they may cause models to settle into sharp minima, leading to poor generalization. On the other hand, very small batch sizes introduce excessive noise in updates, slowing convergence.

Ignoring hardware constraints is another mistake: batch sizes should fit within GPU memory limits to prevent out-of-memory crashes. Mixed-precision training can reduce memory usage and make larger batches feasible. Additionally, failing to adjust the learning rate when the batch size changes can make training inefficient. Larger batches generally work best with higher learning rates, while smaller batches often need lower ones to remain stable.

How to Choose the Right Batch Size

The ideal batch size depends on the model architecture, dataset size, and available hardware. Here are some guidelines to consider:

Start with Powers of Two: Use batch sizes like 32, 64, or 128. Powers of two are a widely used convention and often align well with GPU memory layouts and parallel kernels, although a speedup over nearby values is not guaranteed.

Balance Memory and Performance: If training crashes due to memory constraints, lower the batch size. If training is too slow, gradually increase it until you find a balance between speed and stability.

Monitor Training Stability: If the loss swings sharply from step to step, increasing the batch size may provide more stable updates. If the loss curve is smooth but the model stalls or generalizes poorly, reducing the batch size can help.

Experiment with Learning Rates: Larger batch sizes usually work best with higher learning rates, while smaller batches often need lower learning rates to prevent noisy, unstable training. Retune the learning rate whenever you change the batch size.

In many cases, a batch size of 32 or 64 provides a good balance of speed, stability, and accuracy. However, certain tasks, such as image classification on large datasets, may benefit from higher batch sizes when sufficient computational resources are available.
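The advice to lower the batch size when memory runs out can be automated with a simple halving loop. This is a minimal sketch: `find_workable_batch_size` and `fake_step` are hypothetical helpers, and it assumes a failed step raises Python's built-in `MemoryError`, whereas deep learning frameworks typically raise their own out-of-memory exception types.

```python
def find_workable_batch_size(try_step, start=256, minimum=1):
    """Halve the batch size until try_step(batch_size) stops raising MemoryError."""
    batch_size = start
    while batch_size >= minimum:
        try:
            try_step(batch_size)
            return batch_size
        except MemoryError:
            batch_size //= 2  # stay on powers of two when start is one
    raise RuntimeError("no batch size in the tested range fits in memory")

def fake_step(batch_size, limit=48):
    """Stand-in for one training step; pretends batches above `limit` run out of memory."""
    if batch_size > limit:
        raise MemoryError(f"batch size {batch_size} does not fit")

chosen = find_workable_batch_size(fake_step)
print(chosen)  # 32
```

In a real setup, `try_step` would run one forward and backward pass at the given batch size. The largest size that fits is only an upper bound; stability and generalization may still favor a smaller value.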

Conclusion

Batch size in deep learning isn't just a technical setting—it's a fundamental aspect of model training that affects speed, accuracy, and efficiency. Whether working with small-scale models or training complex neural networks, understanding how batch size influences convergence and generalization is key to building effective AI systems. Striking the right balance ensures efficient training while preventing common pitfalls like slow convergence or poor generalization. By experimenting with batch sizes and monitoring training dynamics, deep learning practitioners can optimize their models for better performance and stability.